"Android MediaCodec trace": difference between revisions

From Personal Wiki

free6d1823 (talk | contribs)

free6d1823(讨论 | 贡献) |

||

| (未显示1个用户的14个中间版本) | |||

| 第20行: | 第20行: | ||

</source> | </source> | ||

| − | 2. setDisplay(SurfaceHolder sh) | + | 2. MediaPlayer.java: setDisplay(SurfaceHolder sh) or setSurface(Surface surface) |

<source lang="c"> | <source lang="c"> | ||

| − | + | => native void _setVideoSurface(Surface surface); | |

| − | + | => | |

| − | + | => /frameworks/base/media/jni/android_media_MediaPlayer.cpp: android_media_MediaPlayer_setVideoSurface(JNIEnv *env, jobject thiz, jobject jsurface) | |

| − | + | => setVideoSurface(JNIEnv *env, jobject thiz, jobject jsurface, jboolean mediaPlayerMustBeAlive) | |

| − | + | { | |

sp<IGraphicBufferProducer> new_st = surface->getIGraphicBufferProducer(); | sp<IGraphicBufferProducer> new_st = surface->getIGraphicBufferProducer(); | ||

| − | + | MediaPlyer getMediaPlayer()->setVideoSurfaceTexture(new_st); | |

| − | + | } | |

| − | + | => libmediaplayerservice/MediaPlayerService.cpp: MediaPlayerService::Client::setVideoSurfaceTexture(<IGraphicBufferProducer>& bufferProducer) | |

| + | { | ||

sp<ANativeWindow> anw; | sp<ANativeWindow> anw; | ||

| − | + | anw = new Surface(bufferProducer, true /* controlledByApp */); | |

| − | + | nativeWindowConnect(anw.get(), "setVideoSurfaceTexture"); | |

| − | + | ||

| − | + | ||

| − | + | getPlayer()->setVideoSurfaceTexture(bufferProducer); | |

| − | + | mConnectedWindow = anw; //decoder | |

| + | mConnectedWindowBinder = binder(IInterface::asBinder(bufferProducer)); | ||

} | } | ||

| − | } | + | => libmediaplayerservice/nuplayer/NuPlayerDriver.cpp: NuPlayerDriver::setVideoSurfaceTexture(&bufferProducer) |

| + | => NuPlayer::setVideoSurfaceTextureAsync(<IGraphicBufferProducer> &bufferProducer) | ||

| + | msg->post(kWhatSetVideoSurface, new Surface(bufferProducer, true )); | ||

| + | => void NuPlayer::onMessageReceived(const sp<AMessage> &msg: kWhatSetVideoSurface) | ||

| + | { | ||

| + | surface = <Surface *>(msg->findObject("surface")->obj.get()); | ||

| + | mVideoDecoder->setVideoSurface(surface); ==>msg(kWhatSetVideoSurface, surface)->postAndAwaitResponse(); | ||

| + | performSetSurface(surface); | ||

| + | mDeferredActions.push_back(new FlushDecoderAction(FLUSH_CMD_FLUSH /* audio */, FLUSH_CMD_SHUTDOWN /* video */)); | ||

| + | mDeferredActions.push_back(new SetSurfaceAction(surface)); | ||

| + | mDeferredActions.push_back(new ResumeDecoderAction()) | ||

| + | processDeferredActions(); | ||

| + | } | ||

| + | => NuPlayer::Decoder::onMessageReceived(const sp<AMessage> &msg, kWhatSetVideoSurface) | ||

| + | { | ||

| + | nativeWindowDisconnect(surface.get(), "kWhatSetVideoSurface(surface)"); | ||

| + | mCodec->setSurface(surface); | ||

| + | nativeWindowConnect(mSurface.get(), "kWhatSetVideoSurface(mSurface)"); | ||

| + | mSurface = surface; | ||

| + | |||

| + | } | ||

| + | => /frameworks/av/media/libstagefright/ACodec.cpp ACodec::setSurface(const sp<Surface> &surface) | ||

| + | { msg(kWhatSetSurface, surface)->postAndAwaitResponse(); } | ||

| + | => ACodec::BaseState::onMessageReceived(const sp<AMessage> &msg, kWhatSetSurface) | ||

| + | { mCodec->handleSetSurface(static_cast<Surface *>(obj.get())); } | ||

| + | => ACodec::handleSetSurface(const sp<Surface> &surface) | ||

| + | { | ||

| + | ANativeWindow *nativeWindow = surface.get(); | ||

| + | setupNativeWindowSizeFormatAndUsage(nativeWindow, &usageBits, !storingMetadataInDecodedBuffers()); /=> | ||

| + | nativeWindow->query(nativeWindow, NATIVE_WINDOW_MIN_UNDEQUEUED_BUFFERS,&minUndequeuedBuffers); | ||

| + | buffers = mBuffers[kPortIndexOutput(1)]; | ||

| + | native_window_set_buffer_count(nativeWindow, buffers.size()); | ||

| + | surface->getIGraphicBufferProducer()->allowAllocation(true); //// need to enable allocation when attaching | ||

| + | for each BufferInfo info in buffers[i] | ||

| + | storingMetadataInDecodedBuffers() | ||

| + | surface->attachBuffer(info.mGraphicBuffer->getNativeBuffer()); | ||

| + | if (!storingMetadataInDecodedBuffers()) { //(mPortMode[kPortIndexOutput] == IOMX::kPortModeDynamicANWBuffer)==true | ||

| + | for each BufferInfo info in buffers[i] | ||

| + | if (info.mStatus == BufferInfo::OWNED_BY_NATIVE_WINDOW) { | ||

| + | nativeWindow->cancelBuffer(nativeWindow, info.mGraphicBuffer->getNativeBuffer(), info.mFenceFd); | ||

| + | } | ||

| + | } | ||

| + | surface->getIGraphicBufferProducer()->allowAllocation(false); | ||

| + | } | ||

| + | mNativeWindow = nativeWindow; | ||

| + | mNativeWindowUsageBits = usageBits; | ||

| + | } | ||

| + | \=> ACodec::setupNativeWindowSizeFormatAndUsage( ANativeWindow *nativeWindow, int *finalUsage, reconnect) { | ||

| + | mOMXNode->getParameter(OMX_IndexParamPortDefinition, &def, sizeof(def)); | ||

| + | mOMXNode->getExtensionIndex("OMX.google.android.index.AndroidNativeBufferConsumerUsage", &index); | ||

| + | nativeWindow->query(nativeWindow, NATIVE_WINDOW_CONSUMER_USAGE_BITS, &usageBits) | ||

| + | mOMXNode->setParameter(index, ¶ms, sizeof(params)); | ||

| + | mOMXNode->getGraphicBufferUsage(kPortIndexOutput, &finalUsage ); | ||

| + | finalUsage |= GRALLOC_USAGE_PROTECTED| kVideoGrallocUsage | ||

| + | setNativeWindowSizeFormatAndUsage(nativeWindow, def.w, def.h, def.color, rotate, finalUsage, reconnect); | ||

| + | } | ||

</source> | </source> | ||

| 第111行: | 第166行: | ||

</source> | </source> | ||

| − | + | /frameworks/av/media/libstagefright/ACodec.cpp: | |

| − | + | ||

<source lang="c"> | <source lang="c"> | ||

| − | + | ACodec::ExecutingState::resume() | |

| − | + | { | |

| − | + | submitOutputBuffers(); | |

| − | + | for all input buffer in mCodec->mBuffers[kPortIndexInput].size(), | |

| − | + | postFillThisBuffer(info); | |

| − | + | } | |

| − | + | </source> | |

| − | + | ||

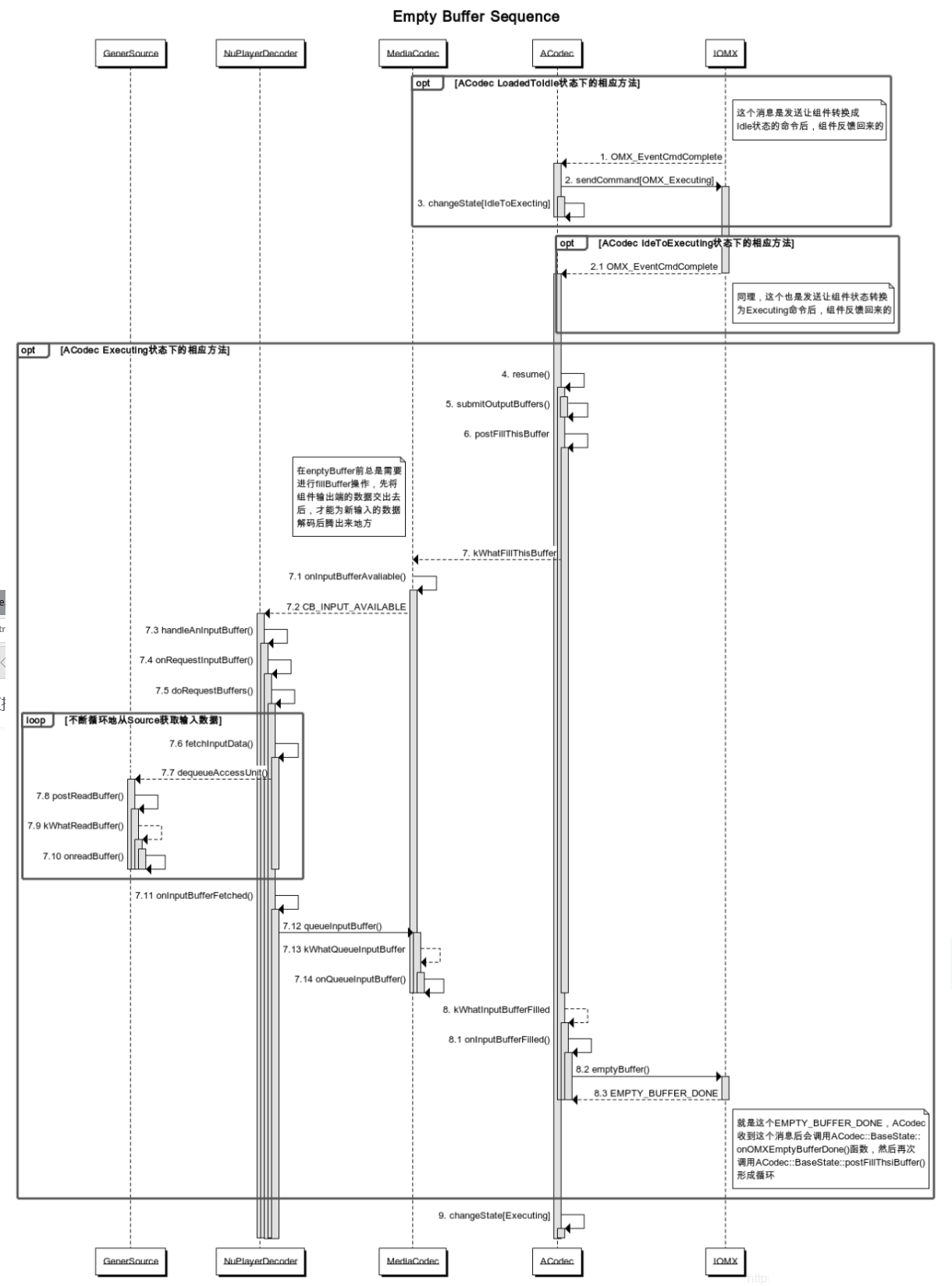

| + | 5. Decoder input: | ||

| + | [[文件:emptybuffer.png]] | ||

| + | <source lang="c"> | ||

| + | ACodec::IdleToExecutingState::onOMXEvent(OMX_EventCmdComplete) | ||

| + | mCodec->mExecutingState->resume(); | ||

| + | => ACodec::ExecutingState::resume() { | ||

| + | for each input port BufferInfo info in &mCodec->mBuffers[kPortIndexInput] | ||

| + | if(OWNED_BY_US) postFillThisBuffer(info); | ||

| + | } | ||

| + | => ACodec::postFillThisBUffer() | ||

| + | { | ||

| + | mCodec->mBufferChannel->fillThisBuffer(info->mBufferID); | ||

| + | info->mStatus = BufferInfo::OWNED_BY_UPSTREAM; | ||

| + | } | ||

| + | kWhatFillThisBuffer | ||

| + | MediaCodec::onInputBufferAvailable() | ||

| + | NuplayDecoder: handleAnInputBuffer(); | ||

| + | NuplayDecoder: onRequestInputBuffer() | ||

| + | NuplayDecoder: doRequestBuffers() | ||

| + | NuplayDecoder: fetchInputData() | ||

| + | NuplayDecoder: dequeueAccessUnit() | ||

| + | source::postRequestBuffer(kWhatReadBuffer) | ||

| + | source::onreadBuffer | ||

| + | Nuplay::onInputBufferFetched() | ||

| + | Nuplay:queueInputBuffer(kWhatQueueInputBuffer) | ||

| + | NullPlay::onQueueInputBuffer() | ||

| + | kWhatInputBufferFilled | ||

| + | ACodec::onInputBufferFilled() | ||

| + | ACodec::emptyBuffer() | ||

| + | ... | ||

| + | OMX::OnEmptyBufferDone(…) | ||

| + | ACodec::BaseState::onOMXEmptyBufferDone | ||

| + | |||

| + | |||

</source> | </source> | ||

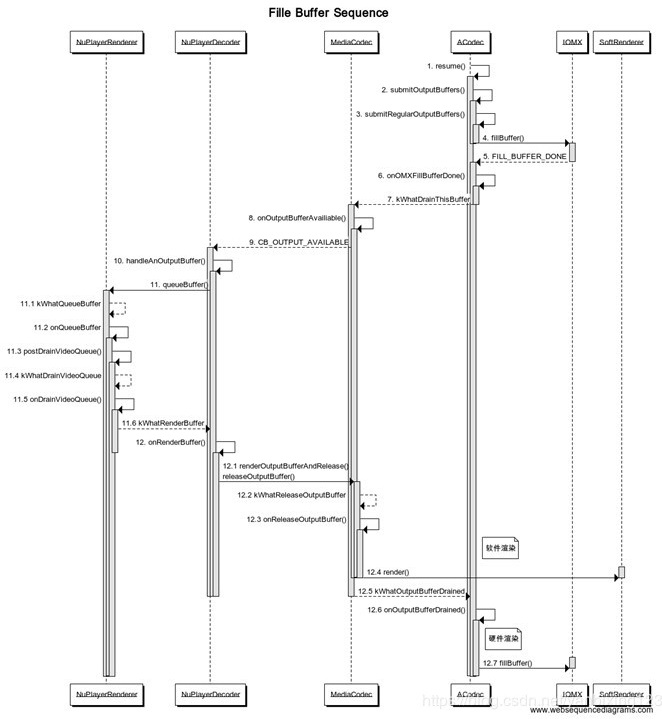

6. Decoder output | 6. Decoder output | ||

| − | [[文件: | + | [[文件:fillbuffer.png]] |

| + | |||

| + | |||

| + | |||

<source lang="c"> | <source lang="c"> | ||

| − | + | a. ACodec::ExecutingState::submitOutputBuffers() | |

| − | + | => ACodec::ExecutingState:: submitRegularOutputBuffers() | |

| − | + | { | |

| − | + | for all BufferInfo *info in mCodec->mBuffers[kPortIndexOutput].size(){ | |

| − | + | find buffuer that is OWNED_BY_US, then | |

| − | + | mCodec->fillBuffer(info) /=> | |

| − | + | } | |

| − | + | } | |

| − | + | ACodec::ExecutingState:: submitOutputMetaBuffers(); | |

| + | /=> ACodec::fillBuffer(BufferInfo *info) { | ||

| + | mOMXNode->fillBuffer(info->mBufferID, OMXBuffer::sPreset, info->mFenceFd); | ||

| + | or | ||

| + | <IOMXNode> mOMXNode->fillBuffer(info->mBufferID, info->mGraphicBuffer, info->mFenceFd); /=> | ||

| + | info->mStatus = BufferInfo::OWNED_BY_COMPONENT; | ||

| + | } | ||

| + | /=> /frameworks/av/media/libmedia/IOMX.cpp: class HpOMXNode : public HpInterface<BpOMXNode, LWOmxNode> { | ||

| + | fillBuffer(buffer_id buffer, const OMXBuffer &omxBuf, int fenceFd = -1) | ||

| + | { mBase->fillBuffer(buffer, omxBuf, fenceFd) } | ||

| + | => /frameworks/av/media/libstagefright/omx/OMXNodeInstance.cpp: OMXNodeInstance::fillBuffer(buffer, omxBuffer, fenceFd) | ||

| + | { | ||

| + | OMX_BUFFERHEADERTYPE *header = findBufferHeader(buffer, kPortIndexOutput); | ||

| + | if (omxBuffer.mBufferType == OMXBuffer::kBufferTypeANWBuffer) | ||

| + | updateGraphicBufferInMeta_l(kPortIndexOutput, omxBuffer.mGraphicBuffer, buffer, header); | ||

| + | mOutputBuffersWithCodec.add(header); | ||

| + | OMX_FillThisBuffer(mHandle, header); | ||

| + | => mHandle->FillThisBuffer(mHandle, header) /=> | ||

| + | =><project>/source/decoder: decoder.c ChagallHwDecOmx_image_constructor { | ||

| + | comp->FillThisBuffer = decoder_fill_this_buffer; | ||

| + | comp->dec->callbacks = {&OnEvent, &OnEmptyBufferDone, &OnFillBufferDone} /*defined in omx/OMXNodeInstance.cpp */ | ||

| + | } | ||

| + | /=>decoder_fill_this_buffer(OMX_IN OMX_HANDLETYPE hComponent, OMX_IN OMX_BUFFERHEADERTYPE *pBufferHeader) | ||

| + | { | ||

| + | OMX_DECODER *dec = GET_DECODER(hComponent); | ||

| + | if (dec->useExternalAlloc ) { | ||

| + | BUFFER* buff = HantroOmx_port_find_buffer(&dec->out, pBufferHeader); | ||

| + | if (dec->checkExtraBuffers) { | ||

| + | find dec->outputBufList[i] == NULL and != buff->bus_address | ||

| + | dec->codec->setframebuffer(dec->codec, buff, dec->out.def.nBufferCountMin); /=> | ||

| + | } | ||

| + | } else if (USE_ALLOC_PRIVATE) { | ||

| + | buff = HantroOmx_port_find_buffer(&dec->out, pBufferHeader); | ||

| + | bufPrivate = (ALLOC_PRIVATE*) pBufferHeader->pInputPortPrivate; | ||

| + | buff->bus_address = bufPrivate->nBusAddress; | ||

| + | } | ||

| + | /=> <project>/source/decoder:codec_h264.c | ||

| + | CODEC_PROTOTYPE *ChagallHwDecOmx_decoder_create_h264 { | ||

| + | this->base.setframebuffer = decoder_setframebuffer_h264; | ||

| + | } | ||

| + | |||

| + | CODEC_STATE decoder_setframebuffer_h264(CODEC_PROTOTYPE * this, BUFFER *buff, OMX_U32 available_buffers) | ||

| + | { | ||

| + | mem={buff->bus_data, buff->allocsize} | ||

| + | H264DecAddBuffer(this->instance, &mem); /=> | ||

| + | } | ||

| + | /=> h264decapi.c: H264DecAddBuffer(H264DecInst dec_inst, struct DWLLinearMem *info) { | ||

| + | //look into decoder source code | ||

| + | } | ||

| + | |||

| + | b. callback | ||

| + | |||

| + | OMXNodeInstance::OnFillBufferDone() | ||

| + | => OMXNodeInstance::mDispatcher->post(msg(omx_message::FILL_BUFFER_DONE, pBuffer)); | ||

| + | => CodecObserver :onMessages(FILL_BUFFER_DONE) => notify->post(FILL_BUFFER_DONE, "buffer") | ||

| + | => ACodec::BaseState::onOMXMessage(FILL_BUFFER_DONE) | ||

| + | => ACodec::BaseState::onOMXFillBufferDone(bufferID, rangeOffset, rangeLength, flags, timeUs, fenceFd) | ||

| + | { | ||

| + | BufferInfo *info = mCodec->findBufferByID(kPortIndexOutput, bufferID, &index); | ||

| + | info->mStatus = BufferInfo::OWNED_BY_US; | ||

| + | mCodec->notifyOfRenderedFrames(true) | ||

| + | mode = getPortMode(kPortIndexOutput); | ||

| + | case RESUBMIT_BUFFERS: | ||

| + | mCodec->fillBuffer(info); | ||

| + | buffer->setFormat(mCodec->mOutputFormat); | ||

| + | mCodec->mSkipCutBuffer->submit(buffer); | ||

| + | mCodec->mBufferChannel->drainThisBuffer(info->mBufferID, flags); | ||

| + | info->mStatus = BufferInfo::OWNED_BY_DOWNSTREAM; | ||

| + | case FREE_BUFFERS: | ||

| + | mCodec->freeBuffer(kPortIndexOutput, index); | ||

| + | } | ||

| + | |||

</source> | </source> | ||

| + | |||

| + | ref:https://blog.csdn.net/yanbixing123/article/details/88937106?utm_medium=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-1.nonecase&depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-1.nonecase | ||

| + | ref: https://blog.csdn.net/yanbixing123/article/details/88925903#comments | ||

Latest revision as of 09:01, 15 June 2020 (Monday)

- MediaPlayer calling flow

    MediaPlayer mp = new MediaPlayer();
    1. mp.setDataSource(filePath);
    2. mp.setDisplay(MediaFrameworkTest.mSurfaceView.getHolder());
    3. mp.prepare(); // fetch and decode the media data
    4. mp.start();
    5. FillBuffer
    6. EmptyBuffer

1. setDataSource(filePath)

    <MediaPlayerBase> mPlayer = create from (playerType of source);
    // => new NuPlayerDriver(pid)
    {
        mLooper->setName("NuPlayerDriver Looper")->start(PRIORITY_AUDIO);
        mPlayer = new NuPlayer(pid);
    }
    // bind death listeners for the Extractor, OMX and Codec2 services
    // mPlayer->setDataSource(dataSource)

2. MediaPlayer.java: setDisplay(SurfaceHolder sh) or setSurface(Surface surface)

    => native void _setVideoSurface(Surface surface);
    => /frameworks/base/media/jni/android_media_MediaPlayer.cpp:
       android_media_MediaPlayer_setVideoSurface(JNIEnv *env, jobject thiz, jobject jsurface)
    => setVideoSurface(JNIEnv *env, jobject thiz, jobject jsurface, jboolean mediaPlayerMustBeAlive)
    {
        sp<IGraphicBufferProducer> new_st = surface->getIGraphicBufferProducer();
        <MediaPlayer> getMediaPlayer()->setVideoSurfaceTexture(new_st);
    }
    => libmediaplayerservice/MediaPlayerService.cpp:
       MediaPlayerService::Client::setVideoSurfaceTexture(<IGraphicBufferProducer>& bufferProducer)
    {
        sp<ANativeWindow> anw;
        anw = new Surface(bufferProducer, true /* controlledByApp */);
        nativeWindowConnect(anw.get(), "setVideoSurfaceTexture");
        getPlayer()->setVideoSurfaceTexture(bufferProducer);
        mConnectedWindow = anw; // decoder
        mConnectedWindowBinder = binder(IInterface::asBinder(bufferProducer));
    }
    => libmediaplayerservice/nuplayer/NuPlayerDriver.cpp:
       NuPlayerDriver::setVideoSurfaceTexture(&bufferProducer)
    => NuPlayer::setVideoSurfaceTextureAsync(<IGraphicBufferProducer> &bufferProducer)
        msg->post(kWhatSetVideoSurface, new Surface(bufferProducer, true));
    => void NuPlayer::onMessageReceived(const sp<AMessage> &msg: kWhatSetVideoSurface)
    {
        surface = <Surface *>(msg->findObject("surface")->obj.get());
        mVideoDecoder->setVideoSurface(surface); // ==> msg(kWhatSetVideoSurface, surface)->postAndAwaitResponse();
        performSetSurface(surface);
        mDeferredActions.push_back(new FlushDecoderAction(FLUSH_CMD_FLUSH /* audio */, FLUSH_CMD_SHUTDOWN /* video */));
        mDeferredActions.push_back(new SetSurfaceAction(surface));
        mDeferredActions.push_back(new ResumeDecoderAction());
        processDeferredActions();
    }
    => NuPlayer::Decoder::onMessageReceived(const sp<AMessage> &msg, kWhatSetVideoSurface)
    {
        nativeWindowDisconnect(surface.get(), "kWhatSetVideoSurface(surface)");
        mCodec->setSurface(surface);
        nativeWindowConnect(mSurface.get(), "kWhatSetVideoSurface(mSurface)");
        mSurface = surface;
    }
    => /frameworks/av/media/libstagefright/ACodec.cpp:
       ACodec::setSurface(const sp<Surface> &surface)
    { msg(kWhatSetSurface, surface)->postAndAwaitResponse(); }
    => ACodec::BaseState::onMessageReceived(const sp<AMessage> &msg, kWhatSetSurface)
    { mCodec->handleSetSurface(static_cast<Surface *>(obj.get())); }
    => ACodec::handleSetSurface(const sp<Surface> &surface)
    {
        ANativeWindow *nativeWindow = surface.get();
        setupNativeWindowSizeFormatAndUsage(nativeWindow, &usageBits, !storingMetadataInDecodedBuffers()); /=>
        nativeWindow->query(nativeWindow, NATIVE_WINDOW_MIN_UNDEQUEUED_BUFFERS, &minUndequeuedBuffers);
        buffers = mBuffers[kPortIndexOutput(1)];
        native_window_set_buffer_count(nativeWindow, buffers.size());
        surface->getIGraphicBufferProducer()->allowAllocation(true); // need to enable allocation when attaching
        for each BufferInfo info in buffers[i]
            storingMetadataInDecodedBuffers()
            surface->attachBuffer(info.mGraphicBuffer->getNativeBuffer());
        if (!storingMetadataInDecodedBuffers()) { // (mPortMode[kPortIndexOutput] == IOMX::kPortModeDynamicANWBuffer) == true
            for each BufferInfo info in buffers[i]
                if (info.mStatus == BufferInfo::OWNED_BY_NATIVE_WINDOW) {
                    nativeWindow->cancelBuffer(nativeWindow, info.mGraphicBuffer->getNativeBuffer(), info.mFenceFd);
                }
        }
        surface->getIGraphicBufferProducer()->allowAllocation(false);
        mNativeWindow = nativeWindow;
        mNativeWindowUsageBits = usageBits;
    }
    \=> ACodec::setupNativeWindowSizeFormatAndUsage(ANativeWindow *nativeWindow, int *finalUsage, reconnect)
    {
        mOMXNode->getParameter(OMX_IndexParamPortDefinition, &def, sizeof(def));
        mOMXNode->getExtensionIndex("OMX.google.android.index.AndroidNativeBufferConsumerUsage", &index);
        nativeWindow->query(nativeWindow, NATIVE_WINDOW_CONSUMER_USAGE_BITS, &usageBits);
        mOMXNode->setParameter(index, &params, sizeof(params));
        mOMXNode->getGraphicBufferUsage(kPortIndexOutput, &finalUsage);
        finalUsage |= GRALLOC_USAGE_PROTECTED | kVideoGrallocUsage;
        setNativeWindowSizeFormatAndUsage(nativeWindow, def.w, def.h, def.color, rotate, finalUsage, reconnect);
    }
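The deferred-action part of the surface switch (flush, set surface, resume, then processDeferredActions()) can be modelled outside Android as a plain FIFO of callables. This is only a sketch of the ordering idea: `DeferredPlayerSketch` and `setSurfaceDeferred` are hypothetical names, and the real NuPlayer actions do far more than append to a log.

```cpp
#include <deque>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical miniature of a player that queues work and then runs it in order.
class DeferredPlayerSketch {
public:
    // Queues flush -> set surface -> resume, mirroring the three push_back calls
    // in the trace, then drains the queue.
    void setSurfaceDeferred(const std::string &surfaceName) {
        mDeferred.push_back([this] { mLog.push_back("flush"); });
        mDeferred.push_back([this, surfaceName] {
            mSurface = surfaceName;
            mLog.push_back("setSurface:" + surfaceName);
        });
        mDeferred.push_back([this] { mLog.push_back("resume"); });
        processDeferredActions();
    }

    const std::vector<std::string> &log() const { return mLog; }
    const std::string &surface() const { return mSurface; }

private:
    // Runs every queued action in FIFO order.
    void processDeferredActions() {
        while (!mDeferred.empty()) {
            std::function<void()> action = std::move(mDeferred.front());
            mDeferred.pop_front();
            action();
        }
    }

    std::deque<std::function<void()>> mDeferred;
    std::vector<std::string> mLog;
    std::string mSurface;
};
```

Calling `setSurfaceDeferred("surfaceB")` on a fresh object leaves the log as flush, setSurface:surfaceB, resume, which is the order the trace relies on: the decoder is flushed before the surface changes and resumed only afterwards.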

3. prepare

    NuPlayer::GenericSource::onPrepareAsync() {
        dataSource = DataSourceFactory::CreateFromURI(uri) or new FileSource(mFd, mOffset, mLength);
        <IMediaExtractor> ex = mediaExService->makeExtractor()
        => MediaExtractorFactory::CreateFromService(localSource, mime);
        => creator = sniff(source, &confidence, &meta, &freeMeta, plugin, &creatorVersion);
           ==> find the best creator from the ExtractorPlugin gPlugins list.
    }
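The "find the best creator" step amounts to asking every plugin for a confidence value and keeping the highest one. A minimal sketch of that selection follows. `PluginSketch`, `pickBestCreator` and the two sniffers are hypothetical, and sniffing a MIME string instead of the data source itself is a simplification for illustration.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for one entry of the extractor plugin list.
struct PluginSketch {
    std::string name;
    // Returns a confidence in [0, 1]; 0 means "cannot handle this input".
    float (*sniff)(const std::string &mime);
};

// Walks every plugin and keeps the one reporting the highest confidence.
// Returns nullptr when no plugin recognises the input.
inline const PluginSketch *pickBestCreator(const std::vector<PluginSketch> &plugins,
                                           const std::string &mime) {
    const PluginSketch *best = nullptr;
    float bestConfidence = 0.0f;
    for (const PluginSketch &p : plugins) {
        float confidence = p.sniff(mime);
        if (confidence > bestConfidence) {
            bestConfidence = confidence;
            best = &p;
        }
    }
    return best;
}

// Two made-up sniffers: a specific one and a low-confidence fallback.
inline float sniffMp4(const std::string &mime) { return mime == "video/mp4" ? 0.9f : 0.0f; }
inline float sniffAny(const std::string &) { return 0.1f; }
```

With a list holding both sniffers, a "video/mp4" input selects the specific plugin, and anything else falls back to the generic one.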

4. start

    void NuPlayer::start() ==> (new AMessage(kWhatStart, this))->post();
    NuPlayer::onMessageReceived(const sp<AMessage> &msg) { case kWhatStart: onStart(); }
    => NuPlayer::onStart(int64_t startPositionUs, MediaPlayerSeekMode mode)
    => mSource->start();
       hasVideo = (mSource->getFormat(false /* audio */) != NULL);
       mRenderer = new Renderer(mAudioSink, mMediaClock, notify, flags);
       mRendererLooper = new ALooper;
       mRendererLooper->start(false, false, ANDROID_PRIORITY_AUDIO);
       mRenderer->setPlaybackSettings(mPlaybackSettings);
       mRendererLooper->registerHandler(mRenderer);
       mRenderer->setVideoFrameRate(rate);
       postScanSources() => (new AMessage(kWhatScanSources, this))->post();
    => NuPlayer::onMessageReceived(kWhatScanSources) {
        instantiateDecoder(false, &mVideoDecoder) {
            notify = new AMessage(kWhatVideoNotify, this);
            *decoder = new Decoder(notify, mSource, mPID, mUID, mRenderer, mSurface, mCCDecoder);
            format->setInt32("auto-frc", 1); // enable FRC if high-quality AV sync is requested
            (*decoder)->init();
            (*decoder)->configure(format);
            // buffer
            <ABuffer> inputBufs = (*decoder)->getInputBuffers();
            for each buffer in inputBufs[i],
                mbuf = new MediaBuffer(buffer->data(), buffer->size());
                mediaBufs.push(mbuf);
            mSource->setBuffers(audio, mediaBufs);
        }
    }
    NuPlayer::Decoder::onConfigure(const sp<AMessage> &format) {
        mComponentName = format->findString("mime") + " decoder"; // mime is "audio/..." or "video/..."
        mCodec = MediaCodec::CreateByType(mCodecLooper, mime, false /* encoder */, NULL /* err */, mPid);
        // disconnect from surface as MediaCodec will reconnect
        mCodec->configure(format, mSurface, NULL /* crypto */, 0 /* flags */);
        rememberCodecSpecificData(format);
        mCodec->getOutputFormat(&mOutputFormat);
        mCodec->getInputFormat(&mInputFormat); // find width and height from mOutputFormat()
        mCodec->setCallback(reply);
        mCodec->start();
        releaseAndResetMediaBuffers();
    }
    mCodec->initiateAllocateComponent(format); => msg->setWhat(kWhatAllocateComponent);
    ACodec::UninitializedState::onAllocateComponent(msg) {
        OMXClient client; client.connect();
        <IOMX> omx = client.interface();
        OMXCodec::findMatchingCodecs(mime, encoder, &matchingCodecs);
        omx->allocateNode(componentName.c_str(), observer, &node);
        mCodec->mNotify => "CodecBase::kWhatComponentAllocated"
    }
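Nearly every hop in the start path is "post a message, handle it later in onMessageReceived". The pattern can be sketched without the Android looper classes as below. `HandlerSketch` and `MessageSketch` are hypothetical, only the two `kWhat...` names are borrowed from the trace, and a single-threaded queue stands in for ALooper's thread.

```cpp
#include <cstdint>
#include <queue>
#include <string>
#include <vector>

// Message identifiers; the names follow the trace, the values are arbitrary.
enum What : uint32_t { kWhatStart, kWhatScanSources };

struct MessageSketch {
    What what;
};

// Hypothetical single-threaded handler: post() only enqueues, run() dispatches.
class HandlerSketch {
public:
    void post(What what) { mQueue.push(MessageSketch{what}); }

    // Drains the queue; messages posted from inside a handler run in the same pass.
    void run() {
        while (!mQueue.empty()) {
            MessageSketch msg = mQueue.front();
            mQueue.pop();
            onMessageReceived(msg);
        }
    }

    std::vector<std::string> trace;  // records which handler ran, in order

private:
    void onMessageReceived(const MessageSketch &msg) {
        switch (msg.what) {
        case kWhatStart:
            trace.push_back("onStart");
            post(kWhatScanSources);  // like postScanSources() at the end of onStart()
            break;
        case kWhatScanSources:
            trace.push_back("instantiateDecoder");
            break;
        }
    }

    std::queue<MessageSketch> mQueue;
};
```

Posting `kWhatStart` does nothing until `run()` is called, which is why the trace shows start() returning immediately while the decoder is instantiated later from the kWhatScanSources handler.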

/frameworks/av/media/libstagefright/ACodec.cpp:

    ACodec::ExecutingState::resume()
    {
        submitOutputBuffers();
        for all input buffer in mCodec->mBuffers[kPortIndexInput].size(),
            postFillThisBuffer(info);
    }

5. Decoder input:

[[File:emptybuffer.png]]

    ACodec::IdleToExecutingState::onOMXEvent(OMX_EventCmdComplete)
        mCodec->mExecutingState->resume();
    => ACodec::ExecutingState::resume() {
        for each input port BufferInfo info in &mCodec->mBuffers[kPortIndexInput]
            if (OWNED_BY_US) postFillThisBuffer(info);
    }
    => ACodec::postFillThisBuffer()
    {
        mCodec->mBufferChannel->fillThisBuffer(info->mBufferID);
        info->mStatus = BufferInfo::OWNED_BY_UPSTREAM;
    }
    kWhatFillThisBuffer
    MediaCodec::onInputBufferAvailable()
    NuPlayer::Decoder: handleAnInputBuffer();
    NuPlayer::Decoder: onRequestInputBuffer()
    NuPlayer::Decoder: doRequestBuffers()
    NuPlayer::Decoder: fetchInputData()
    NuPlayer::Decoder: dequeueAccessUnit()
    source::postRequestBuffer(kWhatReadBuffer)
    source::onReadBuffer
    NuPlayer::onInputBufferFetched()
    NuPlayer: queueInputBuffer(kWhatQueueInputBuffer)
    NuPlayer::onQueueInputBuffer()
    kWhatInputBufferFilled
    ACodec::onInputBufferFilled()
    ACodec::emptyBuffer()
    ...
    OMX::OnEmptyBufferDone(…)
    ACodec::BaseState::onOMXEmptyBufferDone
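Read as a state machine, the input path moves each buffer through three owners: ACodec itself, the upstream client that fills it, and the codec component that consumes it. The sketch below encodes that reading with assertions on each transition. `InputBufferSketch` and `Owner` are hypothetical, and only the OWNED_BY_UPSTREAM transition is stated explicitly in the trace; the other two are an assumption based on the call order.

```cpp
#include <cassert>

// Who currently holds an input buffer (simplified from BufferInfo::mStatus).
enum class Owner { Us, Upstream, Component };

struct InputBufferSketch {
    Owner owner = Owner::Us;
};

// postFillThisBuffer step: hand an empty input buffer to the upstream client.
inline void postFillThisBuffer(InputBufferSketch &b) {
    assert(b.owner == Owner::Us);
    b.owner = Owner::Upstream;
}

// onInputBufferFilled + emptyBuffer step: the filled buffer goes to the component.
inline void emptyBuffer(InputBufferSketch &b) {
    assert(b.owner == Owner::Upstream);
    b.owner = Owner::Component;
}

// onOMXEmptyBufferDone step: the component has consumed the data and returns the buffer.
inline void onEmptyBufferDone(InputBufferSketch &b) {
    assert(b.owner == Owner::Component);
    b.owner = Owner::Us;
}
```

One full cycle (`postFillThisBuffer`, `emptyBuffer`, `onEmptyBufferDone`) brings the buffer back to `Owner::Us`, at which point it can be offered upstream again.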

6. Decoder output

[[File:fillbuffer.png]]

    a. ACodec::ExecutingState::submitOutputBuffers()
    => ACodec::ExecutingState::submitRegularOutputBuffers()
    {
        for all BufferInfo *info in mCodec->mBuffers[kPortIndexOutput].size() {
            find buffer that is OWNED_BY_US, then
            mCodec->fillBuffer(info) /=>
        }
    }
    ACodec::ExecutingState::submitOutputMetaBuffers();
    /=> ACodec::fillBuffer(BufferInfo *info) {
        mOMXNode->fillBuffer(info->mBufferID, OMXBuffer::sPreset, info->mFenceFd);
        or
        <IOMXNode> mOMXNode->fillBuffer(info->mBufferID, info->mGraphicBuffer, info->mFenceFd); /=>
        info->mStatus = BufferInfo::OWNED_BY_COMPONENT;
    }
    /=> /frameworks/av/media/libmedia/IOMX.cpp: class HpOMXNode : public HpInterface<BpOMXNode, LWOmxNode> {
        fillBuffer(buffer_id buffer, const OMXBuffer &omxBuf, int fenceFd = -1)
        { mBase->fillBuffer(buffer, omxBuf, fenceFd) }
    => /frameworks/av/media/libstagefright/omx/OMXNodeInstance.cpp: OMXNodeInstance::fillBuffer(buffer, omxBuffer, fenceFd)
    {
        OMX_BUFFERHEADERTYPE *header = findBufferHeader(buffer, kPortIndexOutput);
        if (omxBuffer.mBufferType == OMXBuffer::kBufferTypeANWBuffer)
            updateGraphicBufferInMeta_l(kPortIndexOutput, omxBuffer.mGraphicBuffer, buffer, header);
        mOutputBuffersWithCodec.add(header);
        OMX_FillThisBuffer(mHandle, header);
        => mHandle->FillThisBuffer(mHandle, header) /=>
    => <project>/source/decoder: decoder.c ChagallHwDecOmx_image_constructor {
        comp->FillThisBuffer = decoder_fill_this_buffer;
        comp->dec->callbacks = {&OnEvent, &OnEmptyBufferDone, &OnFillBufferDone} /* defined in omx/OMXNodeInstance.cpp */
    }
    /=> decoder_fill_this_buffer(OMX_IN OMX_HANDLETYPE hComponent, OMX_IN OMX_BUFFERHEADERTYPE *pBufferHeader)
    {
        OMX_DECODER *dec = GET_DECODER(hComponent);
        if (dec->useExternalAlloc) {
            BUFFER* buff = HantroOmx_port_find_buffer(&dec->out, pBufferHeader);
            if (dec->checkExtraBuffers) {
                find dec->outputBufList[i] == NULL and != buff->bus_address
                dec->codec->setframebuffer(dec->codec, buff, dec->out.def.nBufferCountMin); /=>
            }
        } else if (USE_ALLOC_PRIVATE) {
            buff = HantroOmx_port_find_buffer(&dec->out, pBufferHeader);
            bufPrivate = (ALLOC_PRIVATE*) pBufferHeader->pInputPortPrivate;
            buff->bus_address = bufPrivate->nBusAddress;
        }
    /=> <project>/source/decoder: codec_h264.c
    CODEC_PROTOTYPE *ChagallHwDecOmx_decoder_create_h264 {
        this->base.setframebuffer = decoder_setframebuffer_h264;
    }

    CODEC_STATE decoder_setframebuffer_h264(CODEC_PROTOTYPE * this, BUFFER *buff, OMX_U32 available_buffers)
    {
        mem = {buff->bus_data, buff->allocsize}
        H264DecAddBuffer(this->instance, &mem); /=>
    }
    /=> h264decapi.c: H264DecAddBuffer(H264DecInst dec_inst, struct DWLLinearMem *info) {
        // look into decoder source code
    }

    b. callback

    OMXNodeInstance::OnFillBufferDone()
    => OMXNodeInstance::mDispatcher->post(msg(omx_message::FILL_BUFFER_DONE, pBuffer));
    => CodecObserver::onMessages(FILL_BUFFER_DONE) => notify->post(FILL_BUFFER_DONE, "buffer")
    => ACodec::BaseState::onOMXMessage(FILL_BUFFER_DONE)
    => ACodec::BaseState::onOMXFillBufferDone(bufferID, rangeOffset, rangeLength, flags, timeUs, fenceFd)
    {
        BufferInfo *info = mCodec->findBufferByID(kPortIndexOutput, bufferID, &index);
        info->mStatus = BufferInfo::OWNED_BY_US;
        mCodec->notifyOfRenderedFrames(true)
        mode = getPortMode(kPortIndexOutput);
        case RESUBMIT_BUFFERS:
            mCodec->fillBuffer(info);
            buffer->setFormat(mCodec->mOutputFormat);
            mCodec->mSkipCutBuffer->submit(buffer);
            mCodec->mBufferChannel->drainThisBuffer(info->mBufferID, flags);
            info->mStatus = BufferInfo::OWNED_BY_DOWNSTREAM;
        case FREE_BUFFERS:
            mCodec->freeBuffer(kPortIndexOutput, index);
    }
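The jump from `mHandle->FillThisBuffer(mHandle, header)` into `decoder_fill_this_buffer` works because the component constructor stores a function pointer in the handle, and the framework stores its own callbacks in the component. The following is a rough sketch of that two-way wiring. Every name ending in `Sketch` is hypothetical, the callback is invoked synchronously here whereas a real component reports completion later from its own thread, and the 1024-byte frame size is made up.

```cpp
#include <cstddef>

// Simplified buffer header: just an id and the number of bytes produced.
struct BufferHeaderSketch {
    int id;
    std::size_t filledLen;
};

struct ComponentSketch;
using FillThisBufferFn = int (*)(ComponentSketch *, BufferHeaderSketch *);
using FillBufferDoneFn = void (*)(void *appData, BufferHeaderSketch *);

// The "handle": a struct of function pointers, as in the OMX component handle.
struct ComponentSketch {
    FillThisBufferFn FillThisBuffer;    // installed by the component's constructor
    FillBufferDoneFn onFillBufferDone;  // installed by the framework side
    void *appData;                      // opaque pointer passed back to the callback
};

// Component-side implementation: pretend to decode one frame, then call back.
inline int decoderFillThisBuffer(ComponentSketch *comp, BufferHeaderSketch *header) {
    header->filledLen = 1024;  // made-up size of the decoded frame
    comp->onFillBufferDone(comp->appData, header);
    return 0;
}

// Framework-side callback: record which buffer came back.
inline void recordFillBufferDone(void *appData, BufferHeaderSketch *header) {
    *static_cast<int *>(appData) = header->id;
}

// Mirrors the constructor step that wires up the function pointers.
inline ComponentSketch makeComponent(int *lastDoneId) {
    return ComponentSketch{decoderFillThisBuffer, recordFillBufferDone, lastDoneId};
}
```

The caller only ever sees `comp.FillThisBuffer(&comp, &header)`, so the same framework code can drive any vendor decoder that fills in the pointer table.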

ref:https://blog.csdn.net/yanbixing123/article/details/88937106?utm_medium=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-1.nonecase&depth_1-utm_source=distribute.pc_relevant.none-task-blog-BlogCommendFromMachineLearnPai2-1.nonecase

ref: https://blog.csdn.net/yanbixing123/article/details/88925903#comments